What Happens When AI Can Write All Your Software?

A few weeks ago, while refactoring some of my old code, I asked an LLM to write a RingBuffer implementation in TypeScript. I’ve written these before in other languages. I know the tricky edge cases, especially around concurrency. To my surprise — and mild horror — the LLM wrote it flawlessly. Better than I could have. No feedback necessary. No vibing at all.

Then I asked it to build something slightly more ambitious: a personal CRM.

It fell apart. Buttons didn’t work. When they did work, the backend threw exceptions. I eventually vibe-coded the fixes and got it running. But when I looked at the backend code, it read like something an undergraduate might write — technically correct in places, but with no sense of how the pieces should fit together.

How can this be? How can the same system that writes better low-level code than I do produce amateur work on what should be a straightforward application?

I think the answer is complexity.

Note that both prompts were equally vague. “Create a RingBuffer” isn’t much more specific than “Create a personal CRM.” The difference isn’t in the specification — it’s in the internal complexity of the task itself.

A RingBuffer is self-contained. It has a few moving parts, clear boundaries, well-defined behavior. A CRM, even a “simple” one, is a web of interconnected concerns: data models, API design, state management, UI flows, error handling, validation — all of which need to work together coherently.
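To make “self-contained” concrete, here is a minimal TypeScript sketch of a RingBuffer. It is not the code the LLM produced. It assumes one common policy (when full, `push` overwrites the oldest element) and a single-threaded caller, so it deliberately sidesteps the concurrency edge cases mentioned earlier. The whole thing is one class, two indices, and some modular arithmetic, with nothing outside itself to coordinate with.

```typescript
/**
 * Fixed-capacity FIFO ring buffer (illustrative sketch).
 * Assumptions: overwrite-oldest when full; single-threaded use only.
 */
class RingBuffer<T> {
  private readonly items: (T | undefined)[];
  private readonly capacity: number;
  private head = 0; // index of the oldest element
  private count = 0; // number of elements currently stored

  constructor(capacity: number) {
    if (!Number.isInteger(capacity) || capacity <= 0) {
      throw new RangeError("capacity must be a positive integer");
    }
    this.capacity = capacity;
    this.items = new Array<T | undefined>(capacity);
  }

  get size(): number {
    return this.count;
  }

  isFull(): boolean {
    return this.count === this.capacity;
  }

  /** Append a value; if the buffer is full, the oldest value is overwritten. */
  push(value: T): void {
    // Slot just past the newest element, wrapping around the end of the array.
    // When full, this is the same slot as `head`.
    const tail = (this.head + this.count) % this.capacity;
    this.items[tail] = value;
    if (this.isFull()) {
      // We just overwrote the oldest element, so the oldest is now one slot later.
      this.head = (this.head + 1) % this.capacity;
    } else {
      this.count++;
    }
  }

  /** Remove and return the oldest value, or undefined if the buffer is empty. */
  shift(): T | undefined {
    if (this.count === 0) return undefined;
    const value = this.items[this.head];
    this.items[this.head] = undefined; // drop the reference so it can be garbage-collected
    this.head = (this.head + 1) % this.capacity;
    this.count--;
    return value;
  }

  /** Snapshot of the contents, oldest first. */
  toArray(): T[] {
    const out: T[] = [];
    for (let i = 0; i < this.count; i++) {
      out.push(this.items[(this.head + i) % this.capacity] as T);
    }
    return out;
  }
}

// Usage: capacity 3, four pushes, so the 4 overwrites the 1.
const buf = new RingBuffer<number>(3);
[1, 2, 3, 4].forEach((n) => buf.push(n));
console.log(buf.toArray()); // logs [2, 3, 4]
```

Every behavior can be checked by reading one file. A CRM has no equivalent: its correctness lives in how many such pieces agree with each other.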

My hypothesis: humans are remarkably good at context-switching. When I’m looking at a React component, my attention is tuned to one set of concerns. When I jump to database code, I activate a different mental mode. I can hold the big picture while zooming into specifics, and I can do this dozens of times per hour without losing the thread.

Current LLM architectures seem to struggle with this. Maybe it’s a training issue. Maybe it’s architectural. I don’t know. But the pattern is consistent: small, contained tasks get done brilliantly. Complex, interconnected systems fall apart.

If this is true, what does it mean for the future of software?

LLMs will commoditize the low-complexity parts of software development. They’ll continue to struggle with the high-complexity parts.

At first glance, I’m tempted to frame this as a frontend vs. backend issue, since frontend code is often considered less complex than backend code. But I don’t think that’s actually right. Plenty of applications have an extremely complex frontend with lots of interconnected parts sitting on top of a simple CRUD backend. The principle is simpler: humans will own whatever is complex; LLMs will handle whatever isn’t.

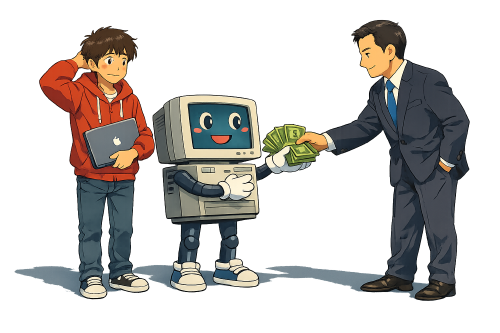

This suggests an interesting development pattern. Imagine your team builds a super-complex-accounting-rules internal package — all the gnarly domain logic, validated and tested by humans who understand the stakes. Then you hand that package to an LLM and say: “Build me an iOS app that uses this.” The LLM treats the complexity like a black box; it doesn’t have to understand it. It just needs to wire up a relatively simple interface.
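Here is a minimal TypeScript sketch of that split, under loudly stated assumptions: `AccountingCore`, `postJournalEntry`, `balance`, and `submitExpense` are hypothetical names, and the single “rule” (every entry must balance, with amounts in integer cents) stands in for the real gnarly domain logic. The point is the shape. The core enforces every invariant behind a small surface, and the generated client only parses input and formats output.

```typescript
// ---- Human-owned core (hypothetical "accounting-core" package) ----

/** One line of a journal entry; amounts are integer cents to avoid float rounding. */
interface JournalLine {
  account: string;
  debitCents: number;
  creditCents: number;
}

type PostResult = { ok: true; entryId: number } | { ok: false; error: string };

/** The narrow surface generated clients may call. All invariants are checked here. */
class AccountingCore {
  private readonly ledger: JournalLine[][] = [];

  postJournalEntry(lines: JournalLine[]): PostResult {
    if (lines.length < 2) {
      return { ok: false, error: "an entry needs at least two lines" };
    }
    for (const line of lines) {
      const amounts = [line.debitCents, line.creditCents];
      if (!amounts.every((a) => Number.isInteger(a) && a >= 0)) {
        return { ok: false, error: `invalid amount on ${line.account}` };
      }
      // Each line must be exactly one of: a debit or a credit.
      if ((line.debitCents > 0) === (line.creditCents > 0)) {
        return { ok: false, error: `line ${line.account} must be a debit or a credit` };
      }
    }
    const debits = lines.reduce((sum, l) => sum + l.debitCents, 0);
    const credits = lines.reduce((sum, l) => sum + l.creditCents, 0);
    if (debits !== credits) {
      return { ok: false, error: `unbalanced: debits ${debits} != credits ${credits}` };
    }
    this.ledger.push(lines.map((l) => ({ ...l }))); // store copies, not caller-owned objects
    return { ok: true, entryId: this.ledger.length };
  }

  /** Net balance of an account in cents (debits minus credits). */
  balance(account: string): number {
    let cents = 0;
    for (const entry of this.ledger) {
      for (const l of entry) {
        if (l.account === account) cents += l.debitCents - l.creditCents;
      }
    }
    return cents;
  }
}

// ---- Generated "last mile" client (e.g. an expenses CLI an LLM wrote) ----

/** Thin adapter: parse user input, delegate to the core, format the result. */
function submitExpense(core: AccountingCore, description: string, amount: string): string {
  const cents = Math.round(Number.parseFloat(amount) * 100); // "abc" becomes NaN; the core rejects it
  const result = core.postJournalEntry([
    { account: "expenses", debitCents: cents, creditCents: 0 },
    { account: "cash", debitCents: 0, creditCents: cents },
  ]);
  return result.ok
    ? `Posted "${description}" as entry #${result.entryId}`
    : `Rejected: ${result.error}`;
}

// Usage
const core = new AccountingCore();
console.log(submitExpense(core, "Team lunch", "42.50")); // Posted "Team lunch" as entry #1
console.log(submitExpense(core, "Typo", "abc")); // Rejected: invalid amount on expenses
console.log(core.balance("cash")); // -4250
```

If the generated client mis-parses input, the worst case is a rejected entry or a confusing message; it has no path to an unbalanced ledger, because that check never leaves the core.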

What might this look like in five years?

I suspect most human developers will be working on complexity — wherever it lives in their stack. Business logic. Integrations. The parts where mistakes are expensive and domain knowledge matters.

Meanwhile, LLMs will be printing out interfaces. Your company’s ERP system will have a human-built core with predictable, accountable logic. But the accounting department might generate its own Windows desktop client while the sales team gets a native iPad app. That’s acceptable because it’s “just” presentation code: bugs there are annoyances, not disasters. And when bugs do appear, users can ask the LLM to fix them without ever touching the core logic owned by human developers.

Counterintuitively, this might be good news for the SaaS giants. If LLMs can’t reliably rewrite Salesforce’s backend — all that accumulated domain logic and integration complexity — then Salesforce’s moat is intact. But now users can print their own interfaces on top of it. The plugin ecosystem might die, but Salesforce would probably make that trade.

Picture what this world actually looks like. Salesforce is still around. Your company still runs on its ERP system. But now you get to choose how you interact with it. Maybe you’re a sales manager who wants a podcast-style summary of yesterday’s numbers to listen to on your commute. Maybe you’re a developer who prefers a CLI to submit your expenses. Maybe you have a visual impairment and need a high-contrast, screen-reader-optimized interface that the vendor never bothered to build.

The complex parts of the stack remain first-class human concerns. But everyone gets to customize their last mile.

I hope I’m right about this. I think this vision is genuinely exciting. I’d love to customize how I interact with the big software systems I’m stuck using.

But maybe I’m wrong. Maybe the big vendors will try to block LLM-based integrations — that seems like the obvious knee-jerk reaction. Or maybe LLMs will keep advancing until they can handle the complex parts too, and actually put Salesforce out of business.

Who knows. I just have a guess about what the next few years look like. And my guess is that complexity is the bottleneck — for now.